Do Language Models Count Letter R's?

The Hidden Cost of Tokenization and Preprocessing

Mar 25, 2025

Every time a new large language model drops — be it GPT-4.5, Claude 3.7, or a 72B-parameter open-source beast — the same tired ritual floods tech forums. Someone inevitably posts: "Hey [Model], how many R's are in 'strawberry'?", followed by mockery when the answer fails. But here's the uncomfortable truth: this test measures nothing about intelligence.

When you type "strawberry", the model doesn’t see S-T-R-A-W-B-E-R-R-Y. It sees something else entirely — a fragmented version of your text, reassembled through rules no user ever sees.

The result? A model might claim there are two R’s, or one, or even none. But before declaring it "dumb", consider this: the error isn’t in the model’s logic, but in a silent translation layer operating behind the scenes. The real question isn’t why models fail this test — it’s why we keep administering a benchmark that misunderstands their most basic design constraint.

What exactly happens to your text before the model "sees" it? And why does this invisible process sabotage simple tasks like letter counting? Let’s dissect the unsung culprit: the tokenizer.

What is a Tokenizer?

A tokenizer is the first and most opinionated gatekeeper of your LLM. Think of it as a translator that converts human-readable text into a secret code the model understands — except this translator has strict rules:

- It fragments text into small pieces called tokens. The vocabulary is learned from training data, so character sequences that appear frequently tend to become single tokens.

  Example input: "Do Language Models Count Letter R's?"

  Tokenized: ["Do", " Language", " Models", " Count", " Letter", " R's", "?"]

- It forgets original characters: Once text is tokenized, letters like R become prisoners inside token blocks. The model sees "Letter", not L-e-t-t-e-r.

- It uses a dictionary to map tokens to integer IDs.

  Example token to ID mapping (illustrative):

  - "Do" -> 1547

  - " Language" -> 2938

  - " Models" -> 2102

  - " Count" -> 867

  - " Letter" -> 1512

  - " R's" -> 1229

  - "?" -> 30

Try this interactively at Tiktokenizer
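To make these rules concrete, here is a minimal sketch of a toy tokenizer in Python. The vocabulary and IDs are the illustrative ones from the mapping above, not those of any real model, and the greedy longest-match splitting is an assumption chosen for brevity. Real tokenizers (BPE, for instance) apply merge rules learned from data.

```python
# Toy vocabulary: the illustrative token -> ID pairs from this article.
# These IDs are made up for the example and match no real tokenizer.
VOCAB = {
    "Do": 1547,
    " Language": 2938,
    " Models": 2102,
    " Count": 867,
    " Letter": 1512,
    " R's": 1229,
    "?": 30,
}
ID_TO_TOKEN = {token_id: token for token, token_id in VOCAB.items()}


def encode(text):
    """Greedy longest-match tokenization (a simplification of real BPE)."""
    ids = []
    pos = 0
    while pos < len(text):
        # Pick the longest vocabulary entry that matches at this position.
        match = max(
            (tok for tok in VOCAB if text.startswith(tok, pos)),
            key=len,
            default=None,
        )
        if match is None:
            raise ValueError(f"no token covers text at position {pos}")
        ids.append(VOCAB[match])
        pos += len(match)
    return ids


def decode(ids):
    """Map IDs back to their token strings and join them."""
    return "".join(ID_TO_TOKEN[i] for i in ids)


text = "Do Language Models Count Letter R's?"
ids = encode(text)
print(ids)          # [1547, 2938, 2102, 867, 1512, 1229, 30]
print(decode(ids))  # Do Language Models Count Letter R's?
```

The model receives only the integer list. The letter R is not something it can inspect directly; it exists only inside the vocabulary entries the integers point to.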

Decoder-Only LLM's Minimalist Pipeline

[Input] → [Tokenizer] → [Core Processing] → [Tokenizer] → [Output]

Let’s try the sentence: "Count R's in 'strawberry'?"

1. Input → Tokenizer

The tokenizer breaks the sentence down into:

["Count", " R's", " in", " 'st", "raw", "berry", "'?"]

It then maps each token to its ID and feeds the ID sequence into the core model.

2. Core Processing

This black box processes the input token sequence (e.g., [867, 1229, 451, 9102, 2981, 1104, 341]) and outputs a predicted sequence, one token at a time (e.g., [2341, 15, 132]). The prediction reflects statistical associations learned from training data, not an inspection of the letters inside each token.

3. Tokenizer (Output Phase)

The output token IDs get decoded back to human-readable text.

For example: [2341, 15, 132] → "Answer: 2" (the correct count is 3)

The Brutal Reality

The model never truly saw the full word — it guessed based on token relationships.
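The gap between the two views can be sketched in a few lines of Python. The token split and IDs are the illustrative ones from this walkthrough, not a real model's output, and `char_view`, `model_view`, and `r_per_token` are hypothetical names introduced only for this example.

```python
word = "strawberry"

# Character view: what a human (or ordinary code) works with.
char_view = list(word)
r_count = word.count("r")
print(char_view)
print(r_count)  # 3

# Token view: the illustrative split from the walkthrough, including the
# leading space and quote that got merged into the first token.
tokens = [" 'st", "raw", "berry"]
token_ids = {" 'st": 9102, "raw": 2981, "berry": 1104}  # made-up IDs
model_view = [token_ids[t] for t in tokens]
print(model_view)  # [9102, 2981, 1104]

# The letters are recoverable only by going back through the vocabulary.
# The integers themselves carry no spelling information.
r_per_token = {t: t.count("r") for t in tokens}
print(r_per_token)
assert sum(r_per_token.values()) == r_count
```

Counting characters is trivial on `char_view`. On `model_view` the same question requires the model to have memorized how each token is spelled, which is a different and harder task.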

CLIP’s Hidden Preprocessor

Just as LLMs don't see raw text, vision models like CLIP never truly "see" your images. Here’s the hidden distortion pipeline:

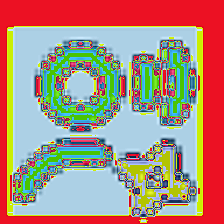

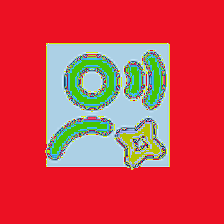

[Your Image] → [CLIP Preprocessor] → [Model "Sees"] → [Output]

CLIP promises a unified way to process images, but its standardized 224x224 input hides distortions that vary by source resolution and DPI. Let’s compare two icons through the CLIP pipeline.

Case Study: Two Displays, Two Realities

Image 1: Old Apple Cinema Display (2K)

- Source: 64 x 85 pixel icon (legacy, low DPI)

- Preprocessing:

  - Resize and center-crop to 224x224 (forced square aspect ratio)

  - Bicubic interpolation (the default in the reference CLIP preprocessing) → jagged artifacts

  - Normalize RGB → unnatural contrast

Jagged edges dominate. The original circle now resembles a hexagon.

Image 2: Modern MacBook Pro (5K)

- Source: 104 x 98 pixel icon (modern, high DPI)

- Preprocessing:

  - Resize to 224x224 → minimal distortion

  - Same interpolation → smooth edges

  - Normalization preserves shape

Curves remain intact. The model sees a more faithful representation.
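The geometry behind the two cases can be worked out with plain arithmetic. The sketch below assumes the preprocessing used by the reference CLIP implementation: resize the shorter side to 224, center-crop to 224x224, then normalize each channel with a fixed mean and standard deviation. Other CLIP ports may differ. The helper names are hypothetical, and truncating the longer side to an integer is also an assumption.

```python
TARGET = 224  # CLIP's standard input side length

# Per-channel statistics used by the reference CLIP preprocessing.
CLIP_MEAN = (0.48145466, 0.4578275, 0.40821073)
CLIP_STD = (0.26862954, 0.26130258, 0.27577711)


def resize_then_crop(width, height, target=TARGET):
    """Return (scale, resized_size, fraction_of_area_kept_after_crop)."""
    scale = target / min(width, height)
    if width <= height:
        resized = (target, int(target * height / width))
    else:
        resized = (int(target * width / height), target)
    # The center crop keeps a target x target window of the resized image.
    kept = (target * target) / (resized[0] * resized[1])
    return scale, resized, kept


def normalize_pixel(rgb):
    """Map an 8-bit RGB pixel to the normalized values the model receives."""
    return tuple(
        (channel / 255 - mean) / std
        for channel, mean, std in zip(rgb, CLIP_MEAN, CLIP_STD)
    )


for name, (w, h) in {"icon 1": (64, 85), "icon 2": (104, 98)}.items():
    scale, resized, kept = resize_then_crop(w, h)
    print(f"{name}: {w}x{h} -> {resized[0]}x{resized[1]} "
          f"(scale {scale:.2f}x, {kept:.0%} of area kept after crop)")

# Pure white no longer maps to (1, 1, 1) once normalized.
print(normalize_pixel((255, 255, 255)))
```

Under these assumptions the first icon is upscaled 3.5x and loses about a quarter of its area to the crop, while the second is upscaled about 2.29x and keeps roughly 95%. The larger the upscale factor, the more of what the model "sees" is interpolated rather than original pixel data.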

Key Takeaways: The Input Pipeline Paradox

- Reality ≠ Model Input

  - Text models see tokenized fragments (e.g., " 'st" + "raw" + "berry"), not raw characters

  - Vision models see resized, normalized images (e.g., 224px square artifacts), not your original pixels

The Core Lesson

A model’s competence depends as much on its input pipeline as its architecture. What we call "AI errors" are often preprocessing side effects.

References

- Tiktokenizer: Visualize how different tokenizers fragment text

- CLIP Preprocessing (Wikipedia): Resizing, normalization, and impact on fidelity

- Karpathy: Deep Dive into LLMs like ChatGPT: General audience talk breaking down LLM architecture

- Reddit: Why do so few persons understand why the strawberries question is so hard to answer for an llm?: LocalLLaMA community discussion on the "strawberry" question